My name is Imen GHAZOUANI. I am a second-year industrial engineering student at the National Engineering School of Tunis (ENIT), passionate about artificial intelligence and entrepreneurship. One of my goals is to use AI to solve real-world problems!

The importance of data pre-processing

The performance of a Machine Learning (ML) model on a given task depends on a variety of parameters. First and foremost come the representation and the quality of the instance data. If the data contains a lot of redundant and irrelevant information (in other words, noisy and untrustworthy data), recognizing patterns during the training phase becomes more challenging. It is also widely recognized that the data preparation and filtering stages account for a significant share of the processing time in ML problems.

Data pre-processing covers data cleansing, feature normalization, and feature extraction and selection. Its product is the final training set. It would therefore be efficient if a single sequence of data pre-processing algorithms achieved the best possible performance on every dataset. That is a real challenge!
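To make the idea of "a single sequence of pre-processing algorithms" concrete, here is a minimal sketch using a scikit-learn `Pipeline` on a tiny made-up numerical array (not the competition data). It chains one cleansing step (median imputation of missing values) with one normalization step (standardization); the choice of these two steps is only an illustration.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Toy numerical data with one missing value (np.nan) to be cleansed.
X = np.array([[1.0, 200.0],
              [2.0, np.nan],
              [3.0, 600.0]])

# One ordered sequence of pre-processing steps:
# 1) cleansing: replace missing values with the column median,
# 2) normalization: rescale each column to zero mean and unit variance.
preprocess = Pipeline(steps=[
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

X_clean = preprocess.fit_transform(X)
print(X_clean.round(3))
```

Because the steps live in one object, the exact same sequence is applied to the training set and to any new data with `preprocess.transform(...)`, which is what makes trying different sequences per dataset manageable.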

The problem to solve!

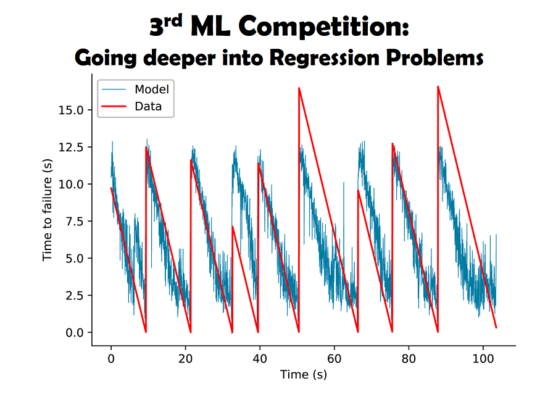

“A challenging anonymous regression problem with multiple numerical, categorical, and textual variables will be the focus of the competition. The data has been anonymized in order to "discourage" participants from developing very dataset-specific ideas, and "push" them to find new approaches to automating their predictive modeling and training processes. The textual features have been encrypted without harming their predictive power. They might be still informative if you find the right way to derive insights from them. The main objective of this challenge is to find the “best” Regression pipeline that predicts the target variable "y" using the provided features as described below.”

My motivation for participating in the competition

As an intern at BUSINESS & AI, I had the opportunity to learn new skills, delve deeper into business analysis and the world of data science, and gain proficiency in automated data cleansing and predictive modeling.

In fact, BUSINESS & AI periodically holds competitions that are open to everyone worldwide, in order to push interns to their absolute limits while, of course, giving AI enthusiasts an opportunity to excel. There was no way I could miss the chance to compete; the first place definitely looked tempting to me!

My approach to solving the problem

To solve the problem efficiently, I followed these steps:

- Understanding the problem and dataset.

- Pre-processing the data: data cleansing, outlier removal, normalization/standardization, and dummy variable creation.

- Feature engineering: feature selection, feature transformation, and feature creation.

- Selecting the modeling algorithm.

- Parameter tuning through GridSearchCV.

- Building the ML model pipeline.

- Using an advanced learning approach.

- Checking the results by making a submission.
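As an illustration of the tuning step in the list above, the sketch below runs scikit-learn's `GridSearchCV` over an `ElasticNet` regressor. The data comes from `make_regression` as a stand-in for the anonymized competition data, and the grid values for `alpha` and `l1_ratio` are arbitrary examples, not the values I actually used.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNet
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the (anonymized) competition data.
X, y = make_regression(n_samples=200, n_features=10, noise=10.0, random_state=0)

pipe = Pipeline(steps=[
    ("scale", StandardScaler()),
    ("model", ElasticNet(max_iter=10_000)),
])

# Example grid (assumed values): alpha sets the overall regularization
# strength, l1_ratio mixes the L1 (lasso) and L2 (ridge) penalties.
param_grid = {
    "model__alpha": [0.01, 0.1, 1.0],
    "model__l1_ratio": [0.2, 0.5, 0.8],
}

# 5-fold cross-validation over all 9 combinations, scored by (negated) RMSE.
search = GridSearchCV(pipe, param_grid, cv=5, scoring="neg_root_mean_squared_error")
search.fit(X, y)

print(search.best_params_)
print("CV RMSE:", -search.best_score_)
```

The `model__` prefix routes each parameter to the pipeline step named `model`, so the scaler is refit inside every fold and no information leaks from the validation folds.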

For the second and third steps (pre-processing and feature engineering), I handled the categorical, numerical, and textual features separately, creating three different pipelines, one for each type of variable. I can tell you, it really made a difference!

Finally, a pipeline I called ML_pipeline brought them all together along with the regression model I used: ElasticNet.
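Here is a minimal sketch of how three type-specific pipelines can be combined with scikit-learn's `ColumnTransformer` and fed into `ElasticNet`. The column names (`num_1`, `num_2`, `cat_1`, `text_1`), the toy data, and the individual steps inside each sub-pipeline are hypothetical placeholders, since the real columns were anonymized; only the overall structure (three pipelines plus ElasticNet inside one `ML_pipeline`) follows the description above.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import ElasticNet
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical column names (the competition columns were anonymized).
numeric_cols = ["num_1", "num_2"]
categorical_cols = ["cat_1"]
text_col = "text_1"  # a single string, so TfidfVectorizer receives 1-D input

numeric_pipeline = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])
categorical_pipeline = Pipeline([
    ("impute", SimpleImputer(strategy="most_frequent")),
    ("onehot", OneHotEncoder(handle_unknown="ignore")),  # dummy variables
])
text_pipeline = Pipeline([
    ("tfidf", TfidfVectorizer()),
])

# Route each group of columns to its own pre-processing pipeline.
preprocessor = ColumnTransformer([
    ("num", numeric_pipeline, numeric_cols),
    ("cat", categorical_pipeline, categorical_cols),
    ("txt", text_pipeline, text_col),
])

ML_pipeline = Pipeline([
    ("preprocess", preprocessor),
    ("regressor", ElasticNet(alpha=0.1, l1_ratio=0.5, max_iter=10_000)),
])

# Tiny made-up dataset, only to show that the pipeline runs end to end.
df = pd.DataFrame({
    "num_1": [1.0, 2.0, np.nan, 4.0, 5.0, 6.0],
    "num_2": [10.0, 20.0, 30.0, 40.0, np.nan, 60.0],
    "cat_1": ["a", "b", "a", np.nan, "b", "a"],
    "text_1": ["xq zr", "zr kp", "xq kp", "kp kp", "zr xq", "xq"],
})
y = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])

ML_pipeline.fit(df, y)
preds = ML_pipeline.predict(df)
print(preds.round(2))
```

Keeping everything in one pipeline means a single `fit` learns the imputation values, scaling statistics, category levels, and vocabulary from the training data only, and the same pipeline object can be passed directly to `GridSearchCV`.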

Tools used during the competition

- Google Colab (as the development environment).

- Python (as the programming language).

- scikit-learn (as the main library; it is built on NumPy and SciPy and provides many algorithms such as support vector machines, random forests, and k-nearest neighbors).

What was the most challenging?

The most challenging part for me was dealing with the text variables. The company had encrypted the text features without harming their predictive power, so I had to clean them before even feeding them to the pipeline I had implemented for this kind of feature.
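The exact cleaning I applied is not detailed here, so the following is only a hypothetical sketch of what cleaning encrypted text can look like. It assumes the encryption keeps spaces between tokens and that punctuation-like symbols are noise; the helper `clean_text` is made up for illustration. Character n-grams (`analyzer="char_wb"` in `TfidfVectorizer`) are one reasonable choice when individual encrypted tokens are unreadable but their sub-strings may still repeat across rows.

```python
import re

from sklearn.feature_extraction.text import TfidfVectorizer


def clean_text(s):
    """Hypothetical cleaning step: lowercase, replace non-alphanumeric symbols
    with spaces, and collapse repeated whitespace. Missing values become ""."""
    if not isinstance(s, str):
        return ""
    s = s.lower()
    s = re.sub(r"[^a-z0-9\s]", " ", s)  # assumption: symbols carry no signal
    s = re.sub(r"\s+", " ", s)
    return s.strip()


# Made-up "encrypted" strings, including a missing value.
raw = ["Xq#Zr  KP!!", None, "zr--xq", "KP kp"]
cleaned = [clean_text(s) for s in raw]
print(cleaned)  # ['xq zr kp', '', 'zr xq', 'kp kp']

# Character n-grams of length 2 to 3, built within word boundaries.
vectorizer = TfidfVectorizer(analyzer="char_wb", ngram_range=(2, 3))
X_text = vectorizer.fit_transform(cleaned)
print(X_text.shape)
```

If the assumption about spaces does not hold for a given dataset, `analyzer="char"` (which ignores word boundaries) would be the natural alternative to try.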

EndNotes

If you are passionate about data science, set huge goals, push yourself, and never stop learning!

The secret is to be self-disciplined and to have a strong will!

Make a distinction!