My name is Wassim CHOUCHEN. After earning my baccalaureate in science, I completed two years of pre-engineering studies at IPEIM, where I gained a solid mathematics background. I am currently a first-year computer science engineering student at ENSI.

Competition summary

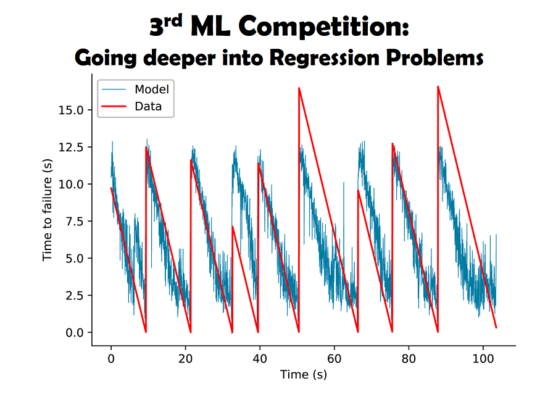

The competition was essentially a regression problem: the goal was to build a machine learning pipeline that predicts a continuous target variable.

Motivation for participating

I’m very enthusiastic about AI and its real-world applications. I have taken part in many competitions, even while I was still discovering the field, because I believe every competition teaches me something new.

Lately I have become somewhat addicted to Zindi competitions. When I saw the competition announcement on LinkedIn, I didn’t hesitate: even though it was late at night, I got up, opened my PC and emailed the BUSINESS & AI team to join.

Approaching the Problem

As a first step, I tried to understand the problem and the role of each feature.

This was not easy at first because, unlike in typical competitions, the features came without any description. As a result, exploring the data and identifying correlations between the features and the target took real effort.

Next came the cleaning phase. Some features had about 90% missing values, so I dropped them. Filling that many gaps with the mean or the mode (the most frequent value) was a risk not worth taking, and more elaborate methods such as regression imputation or stochastic regression imputation would have taken far too much time.
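The dropping step can be sketched in a few lines of pandas. This is a minimal illustration, assuming the data sits in a pandas DataFrame; the helper name `drop_sparse_columns` is hypothetical, and the 90% cut-off is simply the figure mentioned above, exposed as a parameter.

```python
import numpy as np
import pandas as pd


def drop_sparse_columns(df: pd.DataFrame, max_missing: float = 0.9) -> pd.DataFrame:
    """Hypothetical helper: drop every column whose share of missing values is >= max_missing."""
    missing_frac = df.isna().mean()  # per-column fraction of NaNs, in [0, 1]
    to_drop = missing_frac[missing_frac >= max_missing].index
    return df.drop(columns=to_drop)


# Toy frame: "a" is complete, "b" is 90% missing (9 of 10 values are NaN).
toy = pd.DataFrame({
    "a": np.arange(10, dtype=float),
    "b": [1.0] + [np.nan] * 9,
})
cleaned = drop_sparse_columns(toy, max_missing=0.9)
print(list(cleaned.columns))  # ['a']
```

Making the threshold a parameter keeps the choice explicit, since where exactly to draw the line is a judgment call that depends on the dataset.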

For features with less than 50% missing values, I used MICE (Multiple Imputation by Chained Equations), a robust method for dealing with missing data. Next, I generated some simple per-sample statistical features such as “min”, “max”, “mean” and “standard deviation” to help the model generalize. I then noticed that the features varied widely in scale and that the distances between data points were large, so I scaled the data using MinMaxScaler. Finally, I split the data and fitted the model on it.
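The preprocessing steps above can be sketched with scikit-learn. This is an illustrative pipeline on synthetic data, not the competition code. It assumes scikit-learn's `IterativeImputer` as the MICE implementation (it follows the chained-equations idea but returns a single imputation by default), and the helper names `add_row_stats` and `preprocess` are hypothetical. One deliberate difference from the order described: the sketch splits first, so the imputer and scaler only learn from training rows and no test information leaks into them.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (unlocks IterativeImputer)
from sklearn.impute import IterativeImputer
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler


def add_row_stats(X: np.ndarray) -> np.ndarray:
    """Hypothetical helper: append per-sample min, max, mean and std as extra columns."""
    stats = np.column_stack([X.min(axis=1), X.max(axis=1), X.mean(axis=1), X.std(axis=1)])
    return np.hstack([X, stats])


def preprocess(X: np.ndarray, y: np.ndarray, seed: int = 0):
    # Split first so the imputer and scaler are fitted on training rows only.
    X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=seed)

    # MICE-style imputation: each feature with gaps is regressed on the others, iteratively.
    imputer = IterativeImputer(max_iter=10, random_state=seed)
    X_tr = imputer.fit_transform(X_tr)
    X_te = imputer.transform(X_te)

    # Per-sample statistics, computed on the imputed (NaN-free) values.
    X_tr, X_te = add_row_stats(X_tr), add_row_stats(X_te)

    # Rescale every column to [0, 1] using the training min/max only.
    scaler = MinMaxScaler()
    X_tr = scaler.fit_transform(X_tr)
    X_te = scaler.transform(X_te)
    return X_tr, X_te, y_tr, y_te


# Synthetic data: five features on very different scales, ~30% missing (below the 50% cut-off).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5)) * [1, 10, 100, 1000, 0.1]
X[rng.random(X.shape) < 0.3] = np.nan
y = rng.normal(size=200)

X_tr, X_te, y_tr, y_te = preprocess(X, y)
print(X_tr.shape, X_te.shape)  # (160, 9) (40, 9)
```

The nine output columns are the five original features plus the four row statistics. `IterativeImputer` may emit a convergence warning on data like this; it does not stop the pipeline.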

Insights

The most important insight was that the linear correlation between the features was very low. The linear correlation with the target variable “y” was low as well, which pushed me to invest more in exploratory data analysis.
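A low linear correlation does not mean there is no relationship, which is one reason further exploration pays off. The sketch below uses entirely synthetic data (the columns `f1`, `f2` and the quadratic target are assumptions made for illustration) to show how pandas computes the feature-feature and feature-target Pearson correlations, and how a simple binned mean reveals a U-shaped dependence that Pearson correlation misses.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
n = 1_000
df = pd.DataFrame({
    "f1": rng.uniform(-1, 1, n),
    "f2": rng.uniform(-1, 1, n),
})
# Hypothetical target: depends only on f1, and non-linearly (symmetric around 0).
df["y"] = df["f1"] ** 2 + 0.01 * rng.normal(size=n)

features = df.drop(columns="y")
feature_corr = features.corr()            # Pearson correlation among features
target_corr = features.corrwith(df["y"])  # Pearson correlation of each feature with y
print(feature_corr.round(2))
print(target_corr.round(2))               # both values close to 0

# Averaging y within equal-width bins of f1 exposes the U shape.
binned = df.groupby(pd.cut(df["f1"], bins=5), observed=True)["y"].mean()
print(binned.round(2))
```

Here `y` is fully determined by `f1` up to small noise, yet its Pearson correlation with `f1` is near zero because the relationship is symmetric rather than linear.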

Tools

- Exploratory data analysis (scatter plots, heat maps, correlation matrix): I spent time exploring the ranges of the features, their quantiles, and the linear correlations among the features and between the features and the target.

- Multiple Imputation by Chained Equations (MICE): Handling missing data appropriately is one of the most important steps in any pipeline. I chose MICE because I am familiar with it and, in my experience, it has consistently given the best results. MICE is a robust, informative method for dealing with missing data: it fills in (imputes) missing values through an iterative series of predictive models, where each feature with gaps is predicted from the other features, cycling until the imputed values stabilize.

- Sentence Transformers (all-MiniLM-L6-v2): to encode the text data as numeric embedding vectors that the model can consume.

- MinMaxScaler: I used MinMaxScaler to rescale each feature to the range [0, 1]. In addition, most features had a small standard deviation, so in my view it was the most suitable method in our case.

- XGBoost and LightGBM as regression models: two of the most popular gradient boosting implementations. Gradient boosting is an ensemble method in which weak learners (typically shallow decision trees) are added one at a time, each fitted to the errors of the current ensemble, so that together they reach high accuracy. XGBoost and LightGBM are among the models most frequently used in winning solutions in recent years.
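To make the idea of boosting weak learners concrete, here is a from-scratch sketch of least-squares gradient boosting with depth-1 trees (stumps) in NumPy. It is a teaching illustration, not XGBoost or LightGBM: those libraries add, among other things, regularization and second-order gradient information (XGBoost) and histogram-based, leaf-wise tree growth (LightGBM). The function names and the synthetic data are hypothetical.

```python
import numpy as np


def fit_stump(X: np.ndarray, r: np.ndarray):
    """Find the single split (feature j, threshold t) that best fits residuals r in squared error."""
    best = (np.inf, 0, 0.0, r.mean(), r.mean())  # (sse, feature, threshold, left value, right value)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j])[:-1]:  # skip the max so the right side is never empty
            left = X[:, j] <= t
            lv, rv = r[left].mean(), r[~left].mean()
            sse = ((r[left] - lv) ** 2).sum() + ((r[~left] - rv) ** 2).sum()
            if sse < best[0]:
                best = (sse, j, t, lv, rv)
    return best[1:]


def predict_stump(stump, X: np.ndarray) -> np.ndarray:
    j, t, lv, rv = stump
    return np.where(X[:, j] <= t, lv, rv)


def gradient_boost(X: np.ndarray, y: np.ndarray, n_rounds: int = 50, lr: float = 0.1):
    """Each new stump is fitted to the residuals, i.e. the negative gradient of 0.5 * (y - pred)**2."""
    base = y.mean()
    pred = np.full(len(y), base)
    stumps = []
    for _ in range(n_rounds):
        residuals = y - pred
        stump = fit_stump(X, residuals)
        pred += lr * predict_stump(stump, X)  # shrink each weak learner's contribution
        stumps.append(stump)
    return base, lr, stumps


def predict(model, X: np.ndarray) -> np.ndarray:
    base, lr, stumps = model
    return base + lr * sum(predict_stump(s, X) for s in stumps)


# Synthetic regression problem: y depends non-linearly on the first feature only.
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(150, 2))
y = np.sin(X[:, 0]) + 0.1 * rng.normal(size=150)

model = gradient_boost(X, y, n_rounds=50, lr=0.1)
mse_baseline = np.mean((y - y.mean()) ** 2)
mse_boosted = np.mean((y - predict(model, X)) ** 2)
print(f"baseline MSE {mse_baseline:.3f}, boosted MSE {mse_boosted:.3f}")
```

No single stump can follow a sine curve, but the shrunken sum of many stumps approximates it as a step function; this is the sense in which an ensemble of weak learners becomes a strong one. The learning rate `lr` trades the number of rounds against overfitting.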

Time

Time for this competition was rather limited, so I had to move quickly at every step. There were many things I lacked the time and focus to try, such as generating non-linear statistics, handling the temporal features differently, or trying a more robust model. That is probably why I did not achieve a better rank.

Endnotes

This competition gave me my first chance to work with text and tabular data at the same time, and it taught me how to build a pipeline in a short time.

Advice

Someone once told me: “Use machine learning to solve real-world problems, not to get the highest accuracy.” Seeing AI solve our daily problems is a pleasure not everyone gets to enjoy.

I encourage everyone in the AI field to take part in ML competitions: each one teaches you something new and sharpens your real-world problem-solving skills.